F5 ACI Service Center

This document describes load balancer design considerations and deployment options in Cisco ACI, specifically with F5 BIG-IP, from three angles: network design, F5 design, and multi-tenant design. It covers features through Cisco ACI Release 5.2.

When designing a network with load balancing, it is important to ensure that both directions of the traffic pass through the same load balancer. Direct server return (DSR) is the exception and does not have this requirement. You can insert the load balancer in several ways, for example as the default gateway for the servers or as a routing next hop for a routing instance. Another option is to use ACI Policy-Based Redirect (PBR) or Source Network Address Translation (SNAT) on the load balancer to force the return traffic back to the load balancer.

Because F5 BIG-IP is a stateful device, it must see the return traffic in most designs, but using it as the default gateway is not always the best way to deploy it. Cisco ACI can offer a better integration through a feature called Policy-Based Redirect (PBR).

Several high-availability deployment options are available for F5 BIG-IP. This document covers the two common BIG-IP deployment modes, active-active and active-standby. For each deployment mode, it discusses design factors including endpoint movement during failovers, MAC masquerade, source MAC-based forwarding, Link Layer Discovery Protocol (LLDP), and IP aging.

Both Cisco ACI and F5 BIG-IP offer various multi-tenancy support options. This document covers a few methods for mapping multi-tenancy constructs from ACI to BIG-IP. The discussion centers on tenants, Virtual Routing and Forwarding (VRF) instances, route domains, and partitions, and on how multi-tenancy depends on the BIG-IP form factor you use.
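
As a rough illustration of one possible mapping, the sketch below assumes that each ACI tenant maps to a BIG-IP partition of the same name and that each ACI VRF maps to its own BIG-IP route domain. That pairing, the ID allocation scheme, and the names `BigIpScope`, `map_aci_to_bigip`, and `with_route_domain` are assumptions made for illustration, not a method prescribed by this document. The `%ID` suffix follows the BIG-IP convention for writing an address inside a route domain.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BigIpScope:
    """Hypothetical container for the BIG-IP side of the mapping."""
    partition: str
    route_domain_id: int


def map_aci_to_bigip(vrfs_by_tenant, first_route_domain_id=1):
    """Assign one partition per tenant and one route domain per (tenant, VRF).

    Assumption: route domain IDs are handed out sequentially, in sorted order.
    """
    mapping = {}
    next_id = first_route_domain_id
    for tenant in sorted(vrfs_by_tenant):
        for vrf in sorted(vrfs_by_tenant[tenant]):
            mapping[(tenant, vrf)] = BigIpScope(tenant, next_id)
            next_id += 1
    return mapping


def with_route_domain(ip, route_domain_id):
    """Format an address using the BIG-IP '<ip>%<route domain ID>' notation."""
    return f"{ip}%{route_domain_id}"


mapping = map_aci_to_bigip({"TenantA": ["vrf1", "vrf2"], "TenantB": ["vrf1"]})
scope = mapping[("TenantB", "vrf1")]
self_ip = with_route_domain("10.0.0.10", scope.route_domain_id)
```

Giving each VRF its own route domain allows overlapping IP addresses in different VRFs to coexist on one BIG-IP, which is the usual reason to pair the two constructs.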

The Cisco Application Policy Infrastructure Controller (APIC) manages the ACI fabric. The licensed F5 ACI Service Center, an application that runs on the APIC, augments the integration between ACI and F5 BIG-IP. This document explains how the F5 ACI Service Center application can provide operational benefits in joint deployments of F5 BIG-IP and Cisco ACI.

An overview of Cisco ACI

You can combine physical and virtual workloads in a programmable, multi-hypervisor fabric using Cisco Application Centric Infrastructure (Cisco ACI) technology to create a multiservice or cloud data center. The Cisco ACI fabric is made up of separate parts that function as switches and routers but is provisioned and managed as a single unit.

The physical Cisco ACI fabric is built on a Cisco Nexus 9000 series spine-leaf design. Each leaf switch connects to every spine switch, and no direct connections are permitted between leaf nodes or between spine nodes. The leaf nodes serve as the connection point for all servers, storage, physical or virtual L4-L7 service devices, and external networks, and the spine serves as the high-speed forwarding engine between leaf nodes. The Cisco APIC manages, monitors, and administers the Cisco ACI fabric.
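
The wiring rule described above can be sketched in a few lines. This is only an illustrative model: the node names and the helpers `build_fabric_links` and `is_valid_fabric_link` are hypothetical and are not part of any Cisco tooling.

```python
from itertools import product


def build_fabric_links(spines, leaves):
    """Return the full mesh of leaf-to-spine links (every leaf to every spine)."""
    return {(leaf, spine) for leaf, spine in product(leaves, spines)}


def is_valid_fabric_link(a, b, spines, leaves):
    """A fabric link must join exactly one leaf and one spine."""
    return (a in leaves and b in spines) or (a in spines and b in leaves)


spines = ["spine1", "spine2"]
leaves = ["leaf1", "leaf2", "leaf3"]
links = build_fabric_links(spines, leaves)

# Leaf-to-leaf and spine-to-spine connections are rejected by the rule.
leaf_to_leaf_ok = is_valid_fabric_link("leaf1", "leaf2", spines, leaves)
leaf_to_spine_ok = is_valid_fabric_link("leaf1", "spine2", spines, leaves)
```

With two spines and three leaves, the model yields six links, and any two leaves are always exactly two hops apart through a spine.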

Logical constructs used by Cisco ACI

The logical network and security are provisioned and monitored as a single entity in the ACI fabric rather than being configured individually on switches in a fabric.

The security architecture of the ACI solution is based on a whitelist model. A contract is a policy construct that defines communication between endpoint groups (EPGs) or endpoint security groups (ESGs). By default, no unicast communication is possible between EPGs/ESGs without a contract between them. Communication between endpoints in the same EPG/ESG is permitted without a contract.
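
A minimal sketch of this whitelist behavior follows. It is a deliberate simplification: it ignores contract filters (protocols and ports) and treats a contract as permitting traffic in both directions between its consumer and provider. The `Contract` class and `is_permitted` function are hypothetical names used only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Contract:
    """Simplified contract: only the consumer and provider groups are modeled."""
    consumer: str
    provider: str


def is_permitted(src_group, dst_group, contracts):
    """Whitelist check: deny by default, allow intra-group or contracted pairs."""
    if src_group == dst_group:
        # Endpoints in the same EPG/ESG communicate without a contract.
        return True
    # Assumption: a contract permits traffic in both directions between the pair.
    return any({c.consumer, c.provider} == {src_group, dst_group} for c in contracts)


contracts = [Contract(consumer="web", provider="app")]
web_to_app = is_permitted("web", "app", contracts)
web_to_db = is_permitted("web", "db", contracts)
```

Here `web` can reach `app` because a contract exists between them, while `web` to `db` is dropped because nothing explicitly allows it.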

Service Graph and Policy-Based Redirect (PBR) for Cisco ACI

The Layer 4–7 Service Graph is a Cisco ACI feature for inserting Layer 4–7 service devices such as a firewall, load balancer, or IPS between the consumer and provider EPGs. Designing Layer 4–7 service devices in ACI does not necessarily require the Service Graph, as long as the Layer 4–7 devices are inserted into the network using standard routing and bridging.

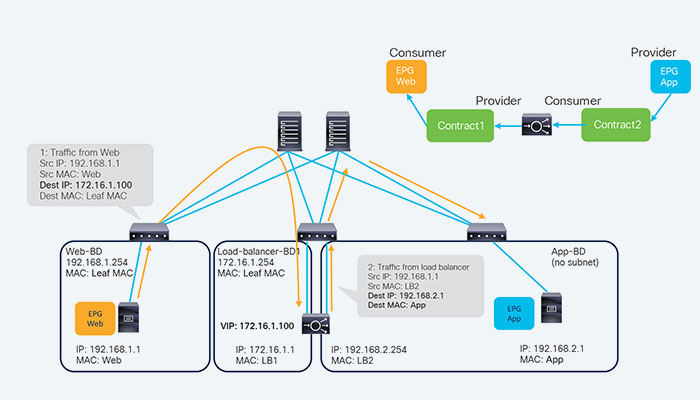

The figure above shows an example that uses routing and bridging to insert a load balancer without a Service Graph. Because the VIP is an ACI internal endpoint, the ACI fabric routes incoming traffic from an endpoint in the consumer EPG to the VIP. If the load balancer is the servers’ gateway, the ACI fabric simply bridges the return traffic from an endpoint in the provider EPG to the load balancer.

If the load balancer interface and the servers are not in the same subnet, SNAT on the load balancer can direct the return traffic back to the load balancer. Although the Service Graph is not required in this situation, using it has the following benefits:

- ACI provides a more logical view of service insertion between consumer and provider EPGs.

- ACI can redirect traffic to the service node without the service node having to be the servers’ default gateway.

- For virtual service appliances, ACI automatically connects and disconnects virtual Network Interface Cards (vNICs). ACI also automatically manages VLAN deployment on the ACI fabric and the virtual networks for service node connectivity.

One of the key benefits of the Service Graph is the PBR feature, which is useful for inserting Layer 4–7 service devices. With PBR, ACI redirects traffic that matches the contract without relying on routing or bridging. In load balancer designs, PBR can redirect return traffic from the servers to a load balancer that does not perform SNAT.

Because the VIP is an internal endpoint of ACI, incoming traffic from a consumer EPG endpoint to the VIP does not require PBR. If the load balancer does not perform SNAT on the incoming traffic, PBR is needed for the return traffic from the provider EPG endpoint. Without PBR, the return traffic would go directly to the consumer endpoint, and the load balancer would not see both directions of the traffic.
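
The choice of return-path mechanism described in this document can be summarized as a small decision function. This is a sketch of the reasoning only: the order in which the options are checked and the names `ReturnPath` and `choose_return_path` are assumptions for illustration, and real designs weigh further factors.

```python
from enum import Enum


class ReturnPath(Enum):
    NOT_REQUIRED = "DSR: return traffic may bypass the load balancer"
    SNAT = "servers reply to the SNAT address on the load balancer"
    GATEWAY = "load balancer is the servers' default gateway"
    PBR = "ACI redirects return traffic to the load balancer"


def choose_return_path(dsr, snat, lb_is_gateway):
    """Pick what brings server return traffic back to the load balancer."""
    if dsr:
        # Direct server return is exempt from the symmetric-path requirement.
        return ReturnPath.NOT_REQUIRED
    if snat:
        # The source address is rewritten, so replies target the load balancer.
        return ReturnPath.SNAT
    if lb_is_gateway:
        # Replies are forwarded to the gateway, which is the load balancer.
        return ReturnPath.GATEWAY
    # Neither SNAT nor gateway placement applies, so PBR is needed.
    return ReturnPath.PBR


decision = choose_return_path(dsr=False, snat=False, lb_is_gateway=False)
```

The last case is the one this section focuses on: without SNAT and without the load balancer acting as the gateway, only PBR keeps both directions of the flow on the load balancer.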

F5 ACI Service Center Key Features

- FQDN-based nodes are supported for visibility.

- VIP dashboard support for MAC Masquerade setups.

- Route domain support for Visibility tables.

- Support for brownfield default gateways.

- Application upgrade support for F5 ACI ServiceCenter.

- Excel reports for Visibility tables using the FASC UI and API.

- Visibility VLAN table supports Static leaf VLANs and Static ports.

- Support for VXLAN tunnel configurations in the node dashboard.

- The app’s features are all accessible to LDAP admin users who log in.

- Debug tool for tracking and quickly fixing (if necessary) backend tasks.

- Database import and export capabilities for F5 ACI ServiceCenter are available.

- VLAN Visibility: The self IP and interface information is displayed in the VLAN table.

- Support for hostname login for standalone BIG-IPs and high availability configurations.

- Dynamic attaching and detaching of APIC workload to BIG-IP pools using APIC notifications.

- Dashboard for visibility: Shows VIP and Node information, including APIC and BIG-IP information.

- Manage numerous BIG-IP (Physical, Virtual Edition, and vCMP) devices by adding and logging in to the devices.

- Self-discovery of BIG-IP devices (Physical, vCMP) in the APIC fabric using LLDP.

- Visibility: View BIG-IP network components such as VLANs, VIPs, and Nodes, and correlate them with APIC data such as tenants, application profiles, and endpoint groups.

- L2-L3 Network Management: Facilitates VLAN, Self IP, and Default Gateway Create/Delete/Update operations. L2-L3 Stitching between APIC Logical Devices and BIG-IP Device.

- L4-L7 App Services: Deploy feature-rich applications on the BIG-IP using the F5 Automation and Orchestration toolchain (a declarative API model).

- Faults: Recording application-generated errors and warnings on the user interface to facilitate troubleshooting.

- Applications can be managed using the L4-L7 App Services Basic tab, which supports the FAST plugin.

- IP search: Search for an IP address on a single BIG-IP or across multiple BIG-IPs, and view the details of the matching Node or VIP.

- APIC Controller Information: Details about the APIC controller that the F5 ACI ServiceCenter is using. On this controller, F5 ACI ServiceCenter logs can be found.

- FAST support for field dependencies, property ordering, pattern matching, and other validations.
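
To make the dynamic attach and detach feature in the list above more concrete, the sketch below shows the kind of reconciliation such a feature implies: comparing the endpoints currently learned in an EPG with the current pool members and deriving what to add and remove. It does not reflect how the F5 ACI ServiceCenter is actually implemented. The function `plan_pool_changes` and the sample addresses are hypothetical, and no APIC or BIG-IP API is called.

```python
def plan_pool_changes(current_members, learned_endpoints):
    """Diff pool members against learned endpoints.

    Returns the addresses to attach (learned but not yet in the pool) and
    to detach (in the pool but no longer learned), each in sorted order.
    """
    current = set(current_members)
    learned = set(learned_endpoints)
    return {
        "attach": sorted(learned - current),
        "detach": sorted(current - learned),
    }


# Pool state on the BIG-IP versus endpoints reported after a notification.
plan = plan_pool_changes(
    current_members=["10.0.0.1", "10.0.0.2"],
    learned_endpoints=["10.0.0.2", "10.0.0.3"],
)
```

In this example the plan attaches `10.0.0.3` and detaches `10.0.0.1`, leaving `10.0.0.2` untouched.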